Want to improve your website’s ranking and get more visitors?

SEO website crawlers can help! This blog covers what they are, why they matter, and how to use them to improve your SEO.

An SEO website crawler scans your website and flags on-page and technical SEO issues. Fixing these issues can help your website rank higher and attract more visitors from search engines.

In short, these tools detect basic to more complex website errors so you can correct them and improve your rankings.

Search engines like Google use their own crawlers (such as Googlebot) to read and understand websites. SEO experts can use similar tools to find problems, discover new opportunities to improve their search rankings, and even gather insights from competitors.

There are many crawling and scraping tools available online. In this guide, we’ll explain what SEO website crawlers do, how they work, and how to use them safely to improve your website.

What is an SEO Website Crawler?

An SEO website crawler, also called a bot or spider, is a tool that scans and analyzes websites much as search engines do. It shows how search engines are likely to explore your site and provides useful details about your website’s structure, content, and overall performance.

Crawlers help find problems that might not be obvious but can impact search rankings. They detect issues like broken links, duplicate content, and indexing errors that could affect your website’s visibility.
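To make the broken-link check concrete: a crawler first pulls every link out of a page, then requests each URL and looks at the HTTP status code it gets back. The Python sketch below shows both steps using only the standard library. The function names are our own, and the actual network request (for example with `urllib.request`) is left out so the example runs offline; treat it as an illustration, not as how any particular tool works internally.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def extract_links(html, base_url):
    """Return absolute, de-duplicated URLs linked from a page (fragments removed)."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    links = []
    for href in parser.hrefs:
        if href.startswith(("mailto:", "tel:", "javascript:")):
            continue  # not crawlable web pages
        absolute, _fragment = urldefrag(urljoin(base_url, href))
        if absolute not in links:
            links.append(absolute)
    return links


def classify_status(status_code):
    """Map an HTTP status code (or None for a failed request) to an audit label."""
    if status_code is None:
        return "unreachable"
    if 200 <= status_code < 300:
        return "ok"
    if 300 <= status_code < 400:
        return "redirect"
    if 400 <= status_code < 500:
        return "broken"
    if status_code >= 500:
        return "server error"
    return "other"
```

For example, `extract_links('<a href="/about">About</a>', "https://example.com/")` returns `["https://example.com/about"]`; a crawler would then request that URL and pass the response code to `classify_status`.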

Why Are SEO Website Crawlers Important for Website Optimization?

SEO website crawlers are key to improving your website because they:

A. Find Broken Links – They detect links that lead to missing pages, which can hurt both user experience and SEO.

B. Identify Duplicate Content – They spot pages with identical or very similar content, which can confuse search engines and lower your ranking. You can rewrite such content with AI-powered tools like Tarantula to improve SEO.

C. Detect Indexing Problems – They highlight pages that search engines struggle to read, helping ensure all important content is visible and searchable.
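Duplicate detection usually works by comparing page text. One simple approach, sketched below in Python, hashes normalized text to catch exact copies and uses word “shingles” (overlapping three-word sequences) with Jaccard similarity to catch near-copies. The shingle size of 3 and the 0.8 threshold are illustrative assumptions; commercial crawlers may use different, more scalable techniques.

```python
import hashlib
import re
from collections import defaultdict
from itertools import combinations


def normalize(text):
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def exact_duplicates(pages):
    """Group URLs whose normalized text is identical. pages: {url: text}."""
    groups = defaultdict(list)
    for url, text in pages.items():
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]


def shingles(text, size=3):
    """Return the set of overlapping word n-grams ("shingles") in the text."""
    words = normalize(text).split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def near_duplicates(pages, threshold=0.8):
    """Return (url_a, url_b, score) for pairs with Jaccard similarity >= threshold."""
    sets = {url: shingles(text) for url, text in pages.items()}
    pairs = []
    for a, b in combinations(sorted(sets), 2):
        union = sets[a] | sets[b]
        if not union:
            continue  # both pages are empty
        score = len(sets[a] & sets[b]) / len(union)
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs
```

Comparing every pair of pages gets slow on large sites, which is why big crawlers typically rely on approximate methods such as MinHash instead of this brute-force loop.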

What to Consider When Choosing an SEO Website Crawler?

When picking an SEO website crawler, certain features can help you get better results:

➕ Crawl Depth and Speed

A good tool should scan your entire website, no matter its size, without slowing it down. It should also let you adjust how deep and fast it crawls to avoid overloading your server.
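Here is a minimal sketch of what “depth” and “speed” controls mean in practice: a breadth-first crawl that stops following links past a maximum click depth, caps the total number of pages, and pauses between requests. `fetch_links` is a hypothetical function you would supply (it downloads a page and returns its links), which keeps the example testable without a network. A production crawler would also honor robots.txt.

```python
import time
from collections import deque
from urllib.parse import urlparse


def crawl(start_url, fetch_links, max_depth=2, max_pages=500, delay_seconds=1.0):
    """Breadth-first crawl bounded by click depth, page count, and request rate.

    fetch_links(url) is a hypothetical, caller-supplied function that downloads
    a page and returns the absolute URLs it links to.
    Returns {url: depth} for every page visited.
    """
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([(start_url, 0)])
    depths = {}
    while queue and len(depths) < max_pages:
        url, depth = queue.popleft()
        depths[url] = depth
        links = fetch_links(url)  # one request per visited page
        time.sleep(delay_seconds)  # pause so the crawl doesn't overload the server
        if depth >= max_depth:
            continue  # depth limit reached: don't follow this page's links
        for link in links:
            # Stay on the same host and skip URLs that are already queued.
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return depths
```

Raising `delay_seconds` makes the crawl gentler on your server; lowering `max_depth` or `max_pages` makes it finish faster at the cost of coverage.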

➕ Easy-to-Use Interface

An intuitive interface makes the collected data easier to understand and act on. Look for tools that present data in a clear, actionable way and offer customizable dashboards and filtering options. Tarantula’s user-friendly interface lets even beginners navigate and use it easily.

➕ Integration Capabilities

The best crawlers give you a complete picture of how well your website is performing by integrating easily with other crucial tools like Google Analytics and Search Console. This integration makes correlating crawl data with actual user behavior and search performance easier.
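As a rough illustration of correlating crawl data with user behavior, the sketch below joins crawler findings with per-URL session counts (for example, from a Google Analytics export) so that issues on your busiest pages rise to the top. The function name and input shapes are assumptions; real exports usually need matching URL formats (full URLs vs. paths) before you join them.

```python
def prioritize_by_traffic(crawl_issues, sessions_by_url):
    """Sort crawl issues so pages with the most sessions come first.

    crawl_issues: list of (url, issue) pairs from a crawler export (assumed shape).
    sessions_by_url: {url: sessions}, e.g. from an analytics export (assumed shape).
    Pages with no recorded sessions are treated as having 0.
    """
    rows = [
        {"url": url, "issue": issue, "sessions": sessions_by_url.get(url, 0)}
        for url, issue in crawl_issues
    ]
    return sorted(rows, key=lambda row: row["sessions"], reverse=True)
```

A missing meta description on a page with thousands of visits will then appear above a 404 on a page nobody visits, which is usually the order you want to fix them in.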

➕ Reporting and Visualization Features

Good reporting features turn complex data into clear insights. Look for a tool that offers custom reports, visual charts, and different export options. With Tarantula, you can use AI to find hidden SEO opportunities and understand search intent.
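Most crawlers let you export findings as CSV. If you post-process results yourself, a few lines of Python with the standard `csv` module produce a file that opens in any spreadsheet. The function and column names below are hypothetical.

```python
import csv
import io


def issues_to_csv(rows, fieldnames=("url", "issue", "severity")):
    """Serialize audit rows (dicts) to CSV text; unknown keys are ignored."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

The `csv` module quotes values that contain commas automatically, so an issue like “missing title, thin content” stays in one column.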

Top 9 SEO Website Crawler Tools for 2025

1. Tarantula

Overview: Tarantula is a powerful SEO crawler designed for both large businesses and growing companies. It helps find and fix on-page SEO issues like duplicate meta tags, thin content, and title tag errors. By resolving these problems, you can boost your website’s ranking in search results.

Key Features:

✔ Continuous crawl monitoring

✔ Advanced JavaScript processing

✔ Specific data extraction rules

✔ Automatic API support

✔ Log file analysis integration

Unique Selling Points: Tarantula lets you adjust crawling settings to fit your specific needs.

Pricing: Starts at $27 per month (yearly plans available).

Pros:

✅ Fast and accurate results

✅ Detailed reports

✅ Great customer support

Cons:

❌ Can take time to learn

❌ Higher price compared to basic tools

Best Use Cases: E-commerce platforms and enterprise-level websites
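Checks like the ones in Tarantula’s overview (duplicate meta tags, thin content, title tag errors) can be approximated in a short script. This Python sketch parses each page with the standard-library `html.parser`, then flags missing or long titles, missing meta descriptions, low word counts, and duplicated titles or descriptions. It is not Tarantula’s implementation; the 60-character title limit and 300-word thin-content threshold are common rules of thumb, not official search engine limits.

```python
from collections import defaultdict
from html.parser import HTMLParser


class OnPageParser(HTMLParser):
    """Extracts the <title>, meta description, and visible text of one page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.text_parts = []
        self._in_title = False
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif not self._skip_depth:
            self.text_parts.append(data)


def audit_pages(pages, max_title_chars=60, min_words=300):
    """Return {url: [issues]} for a dict of {url: html}. Thresholds are assumptions."""
    issues = defaultdict(list)
    by_value = defaultdict(list)  # (field, lowercased value) -> URLs using it
    for url, html in pages.items():
        parser = OnPageParser()
        parser.feed(html)
        parser.close()
        title = parser.title.strip()
        word_count = len(" ".join(parser.text_parts).split())
        if not title:
            issues[url].append("missing title")
        elif len(title) > max_title_chars:
            issues[url].append("title too long")
        if not parser.description:
            issues[url].append("missing meta description")
        if word_count < min_words:
            issues[url].append("thin content")
        if title:
            by_value[("title", title.lower())].append(url)
        if parser.description:
            by_value[("meta description", parser.description.lower())].append(url)
    # Flag every page that shares a title or description with another page.
    for (field, _value), urls in by_value.items():
        if len(urls) > 1:
            for url in urls:
                issues[url].append("duplicate " + field)
    return dict(issues)
```

Pages with no problems are simply absent from the returned dictionary.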

2. Screaming Frog

Overview: A veteran in the industry, Screaming Frog remains the go-to tool for many SEO professionals. For SEO experts who want to conduct thorough site evaluations, this desktop crawler provides an unmatched degree of control.

Key Features:

✔ Custom search options

✔ Advanced filters

✔ Works with popular SEO tools

✔ Supports JavaScript crawling

Unique Selling Points: Provides real-time data analysis while scanning your website.

Pricing: Starts at $259 per year.

Pros:

✅ Free version with some features

✅ Detailed technical reports

Cons:

❌ Uses a lot of computer resources

❌ Can be difficult for beginners

Best Use Cases: Technical SEO audits and small to medium-sized websites.

3. Scrapy

Overview: Scrapy is an open-source web crawling tool built with Python. It’s highly flexible, making it a great choice for developers and SEO experts who want full control over their website data. Unlike paid tools, Scrapy allows complete customization for those with coding skills.

Key Features:

✔ Fully customizable crawling settings

✔ Supports plugins for extra features

✔ Can handle different data formats

Unique Selling Points: Gives developers full control over the crawling process, so they can create custom rules and behaviors.

Pricing: Scrapy itself is free and open-source; the starting price of $9 per month per unit applies to hosted crawling on Scrapy Cloud.

Pros:

✅ Free and open-source

✅ Can be customized for specific needs

Cons:

❌ Requires knowledge of Python

❌ No built-in graphical interface

❌ Harder to learn compared to paid tools

Best Use Cases: Ideal for businesses requiring detailed technical SEO analysis.
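To give a feel for how much control Scrapy offers, here is a sketch of a project `settings.py` tuned for a polite SEO audit. The setting names are standard Scrapy settings; the project name and all values are illustrative assumptions you would tune for your own site and server.

```python
# settings.py for a hypothetical Scrapy project (values are illustrative)
BOT_NAME = "seo_audit"

# Respect robots.txt rules, as search engine crawlers do.
ROBOTSTXT_OBEY = True

# Crawl depth: don't follow links more than 3 hops from the start URLs.
DEPTH_LIMIT = 3

# Crawl speed: minimum delay between requests and parallel requests per domain.
DOWNLOAD_DELAY = 1.0
CONCURRENT_REQUESTS_PER_DOMAIN = 2

# Let Scrapy adjust the delay based on how quickly the server responds.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_MAX_DELAY = 10.0

# Stop after this many responses (handled by the CloseSpider extension).
CLOSESPIDER_PAGECOUNT = 5000

# Pass these error responses to spider callbacks so they can be reported.
HTTPERROR_ALLOWED_CODES = [404, 410, 500, 502, 503]
```

By default Scrapy drops 4xx and 5xx responses before they reach your code; listing them in `HTTPERROR_ALLOWED_CODES` is what lets an audit spider record broken pages.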

4. ParseHub

Overview: ParseHub is more than just a web scraping tool—it’s a powerful SEO crawler designed to handle complex websites with heavy JavaScript. Its easy-to-use visual interface makes it perfect for those without technical skills.

Key Features:

✔ Simple point-and-click data selection

✔ Works well with JavaScript and dynamic sites

✔ Allows scheduled crawls with notifications

Unique Selling Points: Uses a visual method to set up crawls and applies machine learning to understand website structures.

Pricing: Starts at $189 per month (annual plans available)

Pros:

✅ Works great for complex and dynamic websites

✅ Cloud-based, so it doesn’t slow down your computer

Cons:

❌ Can be expensive for frequent, large-scale use

❌ Not the best choice for a standard SEO audit

Best Use Cases: E-commerce competitive analysis and price monitoring on competitor websites.

5. Netpeak Spider

Overview: Netpeak Spider is a powerful yet easy-to-use SEO crawler. It’s a desktop tool packed with features that help SEO experts find and fix website issues. It’s especially great at visualizing data and highlighting problems that impact rankings.

Key Features:

✔ In-depth technical SEO analysis

✔ Bulk URL scanning

✔ Detailed reports with 100+ parameters

Unique Selling Points: Has an “Issues” tab that ranks SEO problems by impact, plus ready-to-use templates.

Pricing: Starts at $28 per month (bundle deals available)

Pros:

✅ Easy-to-use layout with clear navigation

✅ Great at visualizing complex SEO data

✅ Focuses on fixing important issues first

Cons:

❌ Needs to be installed on a computer

❌ Only works on Windows

Best Use Cases: Ideal for SEO agencies and professionals who conduct large-scale audits.

6. Hexometer

Overview: Hexometer is more than just an SEO crawler—it constantly monitors websites to catch problems as they happen. Instead of waiting for scheduled audits, it helps fix issues in real time.

Key Features:

✔ Tracks website speed and uptime

✔ Scans for security risks

✔ Detects SEO changes

Unique Selling Points: Provides instant alerts for critical issues and a clear dashboard to monitor website health.

Pricing: Starts at $49 per month (yearly plans also available)

Pros:

✅ Covers technical, security, and SEO issues

✅ Budget-friendly for small businesses

Cons:

❌ Not ideal for one-time deep scans

❌ Less effective for very large websites

Best Use Cases: Ideal for businesses looking for proactive SEO monitoring and e-commerce sites that require constant uptime.

7. Apify

Overview: Apify is a flexible web scraping and automation tool that SEO professionals can use to collect and analyze custom data. While it isn’t a dedicated SEO crawler, it’s useful for gathering SEO-related insights.

Key Features:

✔ Platform for web scraping and automation

✔ An easy-to-use visual web scraper

✔ SDK for creating custom crawlers

Unique Selling Points: Can handle complex JavaScript, logins, and rotating proxies to avoid getting blocked.

Pricing: Starts at $49 per month (monthly plans available)

Pros:

✅ Works for many types of data collection

✅ Can scrape even complex websites

Cons:

❌ Not made specifically for SEO audits

❌ May be difficult for non-technical users

Best Use Cases: Ideal for researchers and data-driven companies that need custom data collection.

8. Link-Assistant

Overview: Link-Assistant has evolved into a full-fledged SEO suite with a strong emphasis on link analysis and management. While offering broader SEO capabilities, its link-focused crawling technology sets it apart in the competitive landscape.

Key Features:

✔ Finds and analyzes backlinks

✔ Tracks and verifies links automatically

✔ Checks link quality using 50+ metrics

✔ Researches and compares competitor backlinks

Unique Selling Points: Gives a clear plan for better link-building and helps remove harmful backlinks.

Pricing: Starts at $29.10 per month (yearly plans available)

Pros:

✅ More affordable than other link tools

✅ Includes automated outreach for getting backlinks

✅ Detailed reports with easy-to-understand visuals

Cons:

❌ Only available as desktop software (not cloud-based)

❌ Needs manual updates and maintenance

Best Use Cases: Excellent for agencies as well as SEO specialists who are interested in link-building tactics.

9. Lumar (formerly DeepCrawl)

Overview: Lumar is a cloud-based SEO tool that helps businesses analyze and improve their websites. It detects SEO issues, tracks search visibility, and ensures better performance for large sites.

Key Features:

✔ Crawls large websites using the cloud

✔ Finds SEO problems in real time

✔ Compares mobile and desktop performance

Unique Selling Points: Uses AI to suggest SEO improvements and supports large businesses that need scalable solutions.

Pricing: Custom pricing based on website size and business needs

Pros:

✅ Can handle websites with millions of pages

✅ No need to install software (works in the cloud)

✅ Provides in-depth SEO reports that are easy to read

Cons:

❌ Costs more than desktop-based tools

❌ Requires some technical knowledge to use advanced features

Best Use Cases: Excellent for agencies and technical SEO specialists in charge of intricate websites.

Comparison Table of SEO Website Crawler Tools

| Tool | Key Features | Unique Selling Points | Pricing |
| --- | --- | --- | --- |
| Tarantula | Continuous crawl monitoring, JavaScript processing, API support | Adjustable crawling settings | Starts at $27/month |
| Screaming Frog | Custom search options, advanced filters | Real-time data analysis | Starts at $259/year |
| Scrapy | Customizable crawling settings, plugin support | Full control over crawling | Free (open-source); hosted plans from $9/month per unit |
| ParseHub | Visual data selection, JavaScript support | Machine learning for website structures | Starts at $189/month |
| Netpeak Spider | Technical SEO analysis, bulk URL scanning | Ranks issues by impact | Starts at $28/month |
| Hexometer | Speed tracking, uptime monitoring | Real-time issue alerts | Starts at $49/month |
| Apify | Web scraping, automation, SDK support | Handles JavaScript, logins, and rotating proxies | Starts at $49/month |
| Link-Assistant | Backlink analysis, automated link tracking | Link-building focus | Starts at $29.10/month |
| Lumar | Cloud-based crawling, SEO issue detection | AI SEO recommendations | Custom pricing |

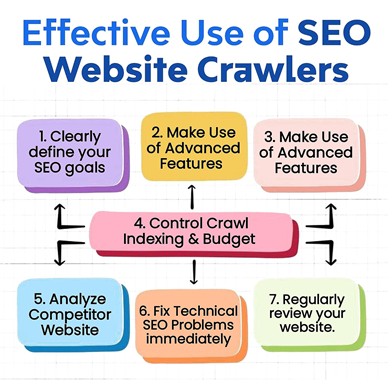

How to Use SEO Website Crawlers Effectively

1. Set Clear SEO Goals

- Decide what you want to improve, like fixing broken links, updating meta tags, or making your site faster.

- Pick the SEO website crawler that best fits your website’s size and SEO focus.

2. Leverage Advanced Features

- Use JavaScript rendering insights from Tarantula to improve indexability.

- Perform log file analysis to understand how search engines interact with your site and optimize accordingly.
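Log file analysis boils down to reading your server’s access log and counting what search engine bots requested. This Python sketch assumes the common Apache/Nginx “combined” log format and counts Googlebot hits per URL and status code; the pattern and function name are our own. Anyone can fake a Googlebot user agent, so for serious analysis Google recommends verifying such requests with a reverse DNS lookup.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format (an assumption about your server's config).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests whose user agent mentions Googlebot, per (path, status)."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[(match.group("path"), int(match.group("status")))] += 1
    return hits
```

You could call it on an open file, for example `with open("access.log") as f: googlebot_hits(f)` (the file name is hypothetical). Paths that Googlebot hits often but that return 404, or important pages it rarely visits, are good places to start optimizing.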

3. Improve Internal Links

- Use crawl reports to find orphan pages (pages that no other page on your site links to) and broken internal links.

- Create a smart internal linking plan to make navigation easier and help search engines understand your site better.
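An orphan page check compares a list of URLs you know exist (say, from your XML sitemap) against every URL your crawl found linked from another page. The sketch below does that with set arithmetic and also lists internal links that point at error pages. The function names and data shapes are assumptions about what you’ve exported from your crawler.

```python
def find_orphans(known_urls, link_graph):
    """Return known pages that no other crawled page links to.

    known_urls: URLs from an XML sitemap or analytics export (assumed source).
    link_graph: {page_url: [linked_urls]} collected during a crawl.
    """
    linked = {
        target
        for source, targets in link_graph.items()
        for target in targets
        if target != source  # a self-link doesn't make a page reachable
    }
    return sorted(set(known_urls) - linked)


def broken_internal_links(link_graph, status_by_url):
    """List (source, target) pairs whose target returned a 4xx or 5xx status.

    URLs without a recorded status are assumed to be fine.
    """
    return sorted(
        (source, target)
        for source, targets in link_graph.items()
        for target in targets
        if status_by_url.get(target, 200) >= 400
    )
```

Each orphan is a candidate for a new internal link from a related page, and each broken pair tells you exactly which page to edit.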

4. Manage Crawl Budget & Indexing

- Track which pages Google indexes using Google Search Console and Tarantula.

- Stop search engines from wasting resources on unimportant pages (like duplicate content or admin pages) by updating robots.txt and using canonical tags.

- Use Tarantula’s built-in AI (ChatGPT) to rewrite duplicate or low-value content.
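You can test robots.txt rules before deploying them. Python’s standard `urllib.robotparser` module parses rules and answers whether a given user agent may fetch a URL; the rules and the helper function below are a hypothetical example. Keep in mind that robots.txt controls crawling, not indexing, and that Python’s parser uses simple prefix matching, which may not match Google’s handling of wildcards exactly.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep all crawlers out of admin and cart pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart
"""


def blocked_paths(robots_txt, paths, user_agent="Googlebot", site="https://example.com"):
    """Return the paths that the given user agent is NOT allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in paths if not parser.can_fetch(user_agent, site + path)]


print(blocked_paths(ROBOTS_TXT, ["/blog/seo-tips", "/admin/login", "/cart?item=42"]))
# prints ['/admin/login', '/cart?item=42']
```

For duplicate pages that should stay accessible to visitors, a `<link rel="canonical" href="...">` tag pointing to the preferred URL is usually a better fit than blocking them in robots.txt.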

5. Examine Competitor Websites

- Leverage platforms like Tarantula and Moz to assess competitor site structures and content strategies.

- Identify gaps and opportunities to strengthen your own SEO strategy.

6. Fix Technical SEO Issues Promptly

- Address critical errors like broken links, slow loading times, and redirect chains.

- Ensure mobile-friendliness and responsiveness through Google Search Console insights.
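A redirect chain is a URL that redirects to another URL that redirects again, adding a delay at every hop. The Python sketch below follows a redirect map (collected, for example, from the `Location` headers your crawler recorded) and reports chains that should be collapsed into a single hop, raising an error on redirect loops. The function names and the `max_hops` limit are hypothetical.

```python
def redirect_chain(url, redirects, max_hops=10):
    """Follow url through redirects ({url: target}) and return the full chain.

    Stops after max_hops redirects; raises ValueError on a redirect loop.
    """
    chain = [url]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        if target in chain:
            raise ValueError("redirect loop: " + " -> ".join(chain + [target]))
        chain.append(target)
    return chain


def chains_to_fix(redirects):
    """Return {start: final_url} for every redirect that takes more than one hop."""
    fixes = {}
    for start in redirects:
        chain = redirect_chain(start, redirects)
        if len(chain) > 2:  # more than one hop between start and final URL
            fixes[start] = chain[-1]
    return fixes
```

Each entry in the result tells you to point the first URL straight at its final destination, and to update any internal links that still use the old URL.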

7. Check Your Website Frequently

- Run scans frequently to catch issues early and keep your website healthy.

- Fix major problems like 404 errors, duplicate content, and slow pages. Tarantula is an affordable all-in-one tool that can help.

Best Practices for Using SEO Website Crawlers

Maximizing the benefits of your SEO website crawler requires following established best practices:

1. Consistent Crawling

Set up a routine to check your website’s health. Large websites that change often should be crawled weekly, while smaller ones can be checked monthly. This helps catch problems early so you can fix them before they hurt your rankings or user experience. A tool like Tarantula can make this process easier.
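Regular crawls are most useful when you compare them. This sketch diffs two crawls, each a mapping of URL to HTTP status, to surface new errors, fixed pages, and pages that appeared or disappeared since the last run. The function name and data format are assumptions; adapt them to whatever your crawler exports.

```python
def compare_crawls(previous, current):
    """Summarize changes between two crawls, each a dict of URL -> HTTP status.

    A URL missing from the previous crawl is treated as having been fine (200).
    """

    def is_error(status):
        return status >= 400

    return {
        "new_errors": sorted(
            url for url, status in current.items()
            if is_error(status) and not is_error(previous.get(url, 200))
        ),
        "fixed": sorted(
            url for url, status in current.items()
            if not is_error(status) and is_error(previous.get(url, 200))
        ),
        "new_pages": sorted(set(current) - set(previous)),
        "disappeared": sorted(set(previous) - set(current)),
    }
```

Reviewing only `new_errors` after each weekly or monthly crawl keeps the routine short while still catching regressions early.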

2. Integrate with Other Tools

Don’t rely on just one tool. Connect your SEO website crawler with Google Analytics and Search Console to fully understand your website’s performance. This will help you understand how technical problems affect real users and conversions, giving you better insights for improving your site.

3. Prioritize Issues by Impact

Not all problems are equally serious. Focus on the biggest SEO issues first, such as:

- Broken links (both inside and outside your website)

- Missing or duplicate meta tags

- Server errors

- Mobile usability problems

By handling the most critical issues first, you make sure your crawling efforts have the biggest impact. Tools like Tarantula can scan your website much as Google does and help find issues affecting your rankings.
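One simple way to rank issues is to multiply a severity weight by the number of affected pages. The weights in this Python sketch are illustrative assumptions, not an industry standard; adjust them to your site’s priorities.

```python
# Illustrative severity weights (assumptions, not an industry standard).
SEVERITY = {
    "server error": 5,
    "broken link": 4,
    "mobile usability": 3,
    "duplicate meta tags": 2,
    "missing meta tags": 2,
}


def prioritize(issue_counts, default_weight=1):
    """Rank issue types by severity weight x number of affected pages."""
    scored = [
        (issue, SEVERITY.get(issue, default_weight) * pages)
        for issue, pages in issue_counts.items()
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

With this scoring, 25 broken links outrank 3 server errors; if a single server error matters more on your site, raise its weight.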

4. Keep Your Tools Up to Date

SEO tools often update with new features to help improve your website. Always update your tools and stay informed through release notes, webinars, and online communities. SEO changes fast; what worked before might not be as useful today, so learning continuously is important.

Conclusion

SEO website crawlers are more than just tools; they are your hidden advantage in an ever-changing, competitive online world. By using them smartly and following the right crawling methods, you can turn a low-visibility website into a high-ranking, traffic-boosting success.

Tarantula SEO Spider stands out among the most innovative solutions on the market today. With its fast crawling speed, AI-driven issue detection, and easy-to-use reports, it helps websites of all sizes tackle SEO problems with ease.

In a world where search engine algorithms are always changing, the websites that track, adjust, and improve regularly will rise to the top. Your competitors are already using SEO crawlers—don’t fall behind! Choose the right tool, apply the best practices, and take your website from unnoticed to unstoppable.